Terraform (TF) Modules have become an increasingly popular and efficient way to configure a wide variety of applications and infrastructure as code. A module packages reusable Terraform configuration, and public registries offer a catalog of these repeatable templates that help you deploy Terraform code quickly and consistently. The most popular tools, clouds, and platforms all have Terraform Modules, some of which have been downloaded tens of millions of times.

The great part about Terraform Modules is that they're flexible and extensible, so you can write your own custom modules for proprietary applications as needed. There are some great tutorials online that extensively cover the basics of getting started and writing your own Terraform Module; this post is a good reference for how to build your TF module.
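To make the idea concrete, here is a minimal sketch of a custom module and a root configuration that calls it. The module path (`modules/tagged-bucket`), the variable names, and the bucket name are hypothetical; a real module would usually split these into `variables.tf`, `main.tf`, and `outputs.tf`, as the comments indicate.

```hcl
# --- modules/tagged-bucket/*.tf (hypothetical custom module) ---

# Inputs the caller must (or may) provide.
variable "bucket_name" {
  description = "Name of the S3 bucket to create"
  type        = string
}

variable "tags" {
  description = "Tags applied to every resource in this module"
  type        = map(string)
  default     = {}
}

# The resource this module manages.
resource "aws_s3_bucket" "this" {
  bucket = var.bucket_name
  tags   = var.tags
}

# Values exposed back to the calling configuration.
output "bucket_arn" {
  value = aws_s3_bucket.this.arn
}

# --- root main.tf: calling the module ---
module "reports_bucket" {
  source      = "./modules/tagged-bucket"
  bucket_name = "example-reports-bucket" # hypothetical bucket name
  tags        = { team = "platform" }
}
```

The caller only sees the inputs and outputs, so the module's internals can evolve without every consumer rewriting their code.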

When leveraging public modules, as with any other resource taken from the public web (whether open source tools and utilities or container images), there are some good practices to keep in mind when selecting your module of choice, including:

- Ensuring it is well-maintained, with good security hygiene (e.g., quick patches for high-severity vulnerabilities)

- Checking that it maintains backward compatibility across upgrades, so that new versions don't break anything

- Confirming it has good release-cycle management of major and minor versions

- Looking for a strong community, reflected in stars, forks, and how quickly issues are resolved in its repo
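One practical way to act on the versioning criteria is to pin the module and provider versions you have vetted, so an upstream major release cannot change your infrastructure unexpectedly. A minimal sketch: the module is `terraform-aws-modules/s3-bucket/aws` from the public Terraform Registry, while the version numbers and bucket name are illustrative assumptions, not recommendations.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # accepts any 5.x release, never 6.0
    }
  }
}

module "logs_bucket" {
  source  = "terraform-aws-modules/s3-bucket/aws"
  version = "~> 4.1" # accepts 4.1 and later 4.x releases, blocks 5.0

  bucket = "example-logs-bucket" # hypothetical bucket name
}
```

Upgrading then becomes a deliberate step: bump the constraint, run `terraform init -upgrade`, and review the resulting plan.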

These checks give a good indication of whether a module is suitable for adoption in your stack or likely to cause you future heartache, so be sure to vet the modules you choose before you apply them to your code.

Advanced Use Cases with Terraform Modules

When creating your own modules, it's best to start with a design of your systems and decide the level of coverage you want your modules to provide. While modules are great for composability, collaboration, and automation, each one, like all code, requires its own share of maintenance.

It is certainly possible to create a module per component, whether Lambda functions, IAM users and roles, policies, or even backend and frontend components. However, the decision to create a module should always be driven by ROI: the value of controlling the code should outweigh the long-term overhead of maintaining the module in perpetuity. If it's easier to take a public module and customize it to your needs, that is sometimes sufficient, and it saves you from writing the module from scratch.

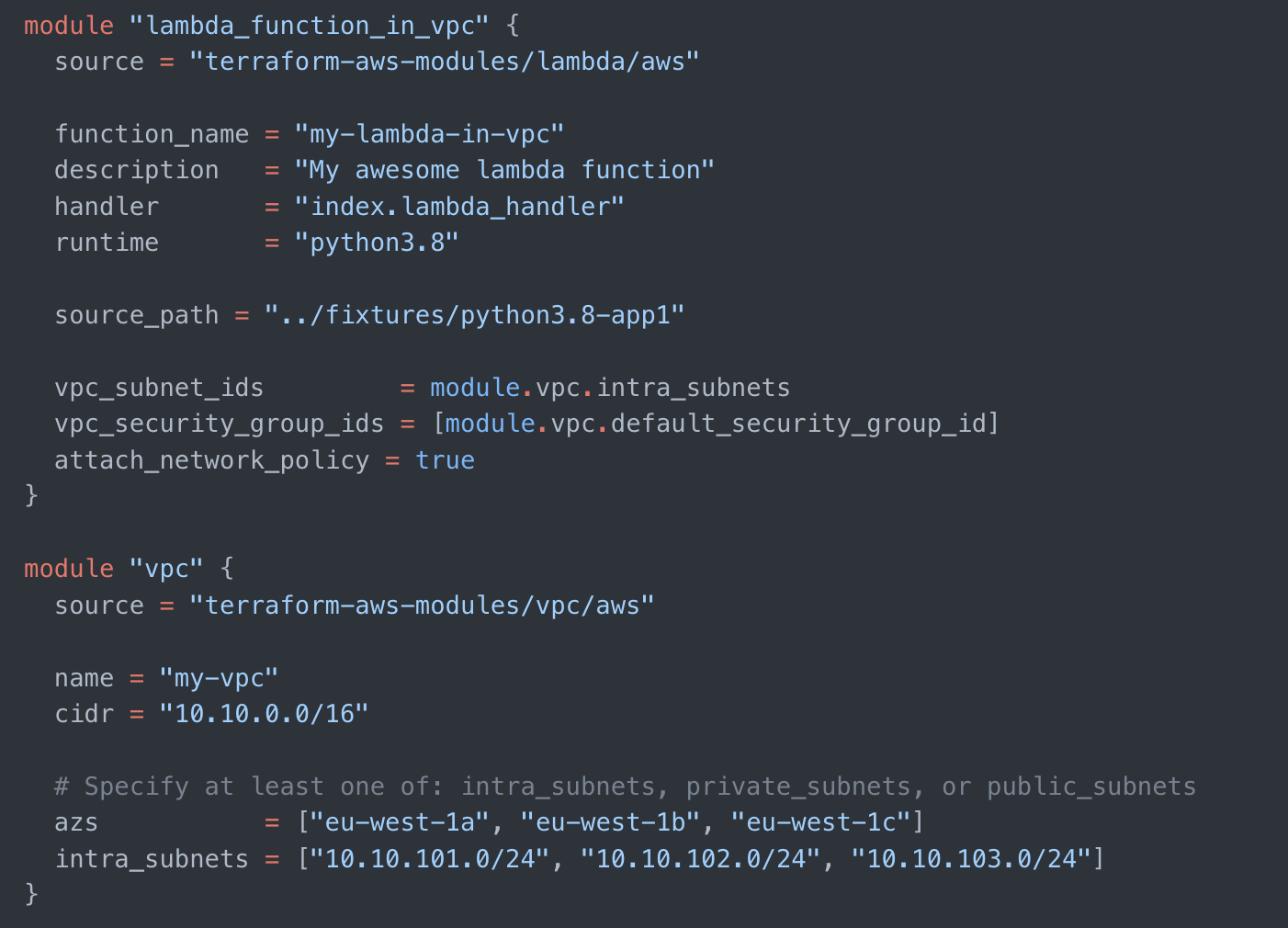

There are also plenty of modules that bundle components together, such as infrastructure/runtime, a database like RDS and IAM roles. Anton Babenko’s AWS module library is just one example of a well-maintained library of resources you can leverage for nearly every AWS use case and component bundle.
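As an illustration of a bundled module, the sketch below uses the VPC module from Anton Babenko's library (`terraform-aws-modules/vpc/aws`), which creates the VPC, subnets, route tables, and NAT gateway from a handful of inputs. The name, CIDR ranges, availability zones, tags, and version constraint are assumptions chosen for the example.

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # illustrative constraint

  name = "example-vpc"
  cidr = "10.0.0.0/16"

  # Hypothetical region/AZs and subnet layout.
  azs             = ["eu-west-1a", "eu-west-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  # One NAT gateway shared by all private subnets keeps the example cheap.
  enable_nat_gateway = true
  single_nat_gateway = true

  tags = { environment = "dev" }
}
```

Writing the same network by hand would mean declaring each subnet, route table, association, and gateway as a separate resource.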

Modules are great provisioning tools, but connecting multiple, diverse providers can add complexity that you need to take into consideration. Take the use case of MongoDB Atlas on AWS with VPC peering: the connection has to be configured from both sides, with Atlas requesting a peering connection to your AWS VPC, and AWS accepting it and routing traffic back to Atlas, for it to work properly.
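A minimal sketch of the two sides of that peering, written as plain resources from the `mongodb/mongodbatlas` and `hashicorp/aws` providers rather than as a published module. All variable names, the Atlas CIDR block, and the region are hypothetical placeholders; check the arguments against the provider versions you use.

```hcl
terraform {
  required_providers {
    aws          = { source = "hashicorp/aws" }
    mongodbatlas = { source = "mongodb/mongodbatlas" }
  }
}

# Hypothetical inputs; supply real values for your environment.
variable "atlas_project_id" { type = string }
variable "aws_account_id" { type = string }
variable "aws_vpc_id" { type = string }
variable "aws_vpc_cidr" { type = string }
variable "aws_route_table_id" { type = string }

# Atlas side: the network container that holds the Atlas CIDR block.
resource "mongodbatlas_network_container" "this" {
  project_id       = var.atlas_project_id
  atlas_cidr_block = "192.168.248.0/21"
  provider_name    = "AWS"
  region_name      = "EU_WEST_1" # Atlas region naming
}

# Atlas side: request a peering connection to the AWS VPC.
resource "mongodbatlas_network_peering" "this" {
  project_id             = var.atlas_project_id
  container_id           = mongodbatlas_network_container.this.container_id
  provider_name          = "AWS"
  accepter_region_name   = "eu-west-1" # AWS region naming
  route_table_cidr_block = var.aws_vpc_cidr
  vpc_id                 = var.aws_vpc_id
  aws_account_id         = var.aws_account_id
}

# AWS side: accept the request that Atlas created.
resource "aws_vpc_peering_connection_accepter" "atlas" {
  vpc_peering_connection_id = mongodbatlas_network_peering.this.connection_id
  auto_accept               = true
}

# AWS side: route traffic for the Atlas CIDR through the peering connection.
resource "aws_route" "to_atlas" {
  route_table_id            = var.aws_route_table_id
  destination_cidr_block    = mongodbatlas_network_container.this.atlas_cidr_block
  vpc_peering_connection_id = aws_vpc_peering_connection_accepter.atlas.id
}
```

If either half is missing, Terraform may apply cleanly on one provider while the connection never becomes usable, which is why both sides belong in the same configuration.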

Fortunately, Terraform Modules have a strong community and good developer tooling, so even these more advanced use cases are well documented. There is also the reverse scenario, where adopting modules is actually the best practice for preventing poor operational hygiene such as infrastructure drift, yet it is often an afterthought.

Leveraging Modules for ClickOps Components

All of the examples above work well when we start by creating our resources as code. But what happens when resources were created manually in the cloud via ClickOps? This is a very common practice in operations, particularly for resources created before a team adopted infrastructure as code. What if we later want to leverage a module, particularly a bundled module that is much better aligned with best practices? How can this be done without causing breakage?

Let's take the example of an S3 bucket created manually. In our scenario, this bucket has no encryption, versioning, access control lists (ACLs), or anything else configured. Therefore, if we'd like to switch to a TF Module that includes these components, it won't match the current configuration of our manually created bucket. In such a scenario, are we stuck forever with a manually provisioned bucket that has no encryption or versioning?

This is exactly the kind of situation that creates drift: when our code and our actual resources are not aligned, changes to our clouds become unpredictable. So what's a good way to overcome this?

The first step is to convert the resource to code and import it into the Terraform state. OK, good! But this is still a bare-bones resource with none of the additional features, such as versioning. Next, replace that bare resource with a module (for example, a public S3 module) that manages the same bucket. This way you pick up all of the additional configuration you want for your S3 bucket without having to write it yourself.
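A minimal sketch of where this ends up, assuming step one already imported the bucket as `aws_s3_bucket.legacy` (for example with `terraform import aws_s3_bucket.legacy my-legacy-bucket`) and that the public `terraform-aws-modules/s3-bucket/aws` module is used. The bucket name is hypothetical, and the module-internal address `aws_s3_bucket.this[0]` reflects how that module is currently written, so verify it against the version you pin.

```hcl
# Step two: the bare aws_s3_bucket.legacy block is deleted and replaced by a module call.
module "legacy_bucket" {
  source  = "terraform-aws-modules/s3-bucket/aws"
  version = "~> 4.1" # illustrative constraint

  bucket = "my-legacy-bucket" # hypothetical name of the ClickOps bucket

  # Features the manually created bucket was missing.
  versioning = {
    enabled = true
  }

  server_side_encryption_configuration = {
    rule = {
      apply_server_side_encryption_by_default = {
        sse_algorithm = "AES256"
      }
    }
  }
}

# Tell Terraform the already-imported bucket now lives inside the module,
# so it is moved in state instead of being destroyed and recreated.
moved {
  from = aws_s3_bucket.legacy
  to   = module.legacy_bucket.aws_s3_bucket.this[0]
}
```

On Terraform 1.5 or later you could instead point an `import` block directly at `module.legacy_bucket.aws_s3_bucket.this[0]` and skip the intermediate resource.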

The next time you run `terraform apply`, Terraform will show you the many changes it is about to make to your bucket and ask you to confirm them. Review the plan before you do, in particular to check that the bucket itself is updated in place rather than destroyed and recreated. Once the changes apply, your bucket is upgraded with the additional functionality you wanted.

That’s great for one-off resources created manually, but how do we do this at scale? What if we have hundreds of manually provisioned S3 buckets with no encryption, ACL or versioning? What now?

EUREKA! This is where a tool like Firefly comes in. It lets you select all of the resources you'd like to update, choose a publicly available module or upload a custom one, and apply the changes to every resource that needs upgrading at once.
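For comparison only, and not Firefly's implementation, the underlying idea of applying one module to many existing buckets can also be written by hand in plain Terraform. A minimal sketch, assuming Terraform 1.7 or later (which allows `for_each` in `import` blocks), the public `terraform-aws-modules/s3-bucket/aws` module, and a hypothetical list of bucket names:

```hcl
# Hypothetical names of manually created buckets to bring under management.
variable "legacy_bucket_names" {
  type    = set(string)
  default = ["legacy-bucket-a", "legacy-bucket-b", "legacy-bucket-c"]
}

# Adopt every existing bucket into its matching module instance.
import {
  for_each = var.legacy_bucket_names
  to       = module.legacy_buckets[each.key].aws_s3_bucket.this[0]
  id       = each.key # for S3 buckets, the import ID is the bucket name
}

# One module instance per bucket, each with versioning and encryption enabled.
module "legacy_buckets" {
  source   = "terraform-aws-modules/s3-bucket/aws"
  version  = "~> 4.1" # illustrative constraint
  for_each = var.legacy_bucket_names

  bucket = each.key

  versioning = {
    enabled = true
  }

  server_side_encryption_configuration = {
    rule = {
      apply_server_side_encryption_by_default = {
        sse_algorithm = "AES256"
      }
    }
  }
}
```

Running `terraform plan` then lists each bucket to be imported along with the versioning and encryption resources to be added, which you review before `terraform apply`.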


The generated code shows the diff between your existing resource and the imported module.

Once you click `apply` (like in the example with the single resource), you can then update all of the selected resources with one single action. This method enables you to write, import, and govern your manually created resources at scale, with minimal pain.

TL;DR - Terraform Modules Made Easy

To wrap it up: Terraform Modules have changed the game for codifying resources, bundling services and components, and enabling easy long-term cloud infrastructure governance. The framework makes it simple to connect both common and proprietary resources through publicly available, maintained modules alongside custom-built modules (which take some research but are eventually fairly easy to create, configure, and maintain). Terraform Modules are a great way to keep your clouds aligned with best practices, and they can still be leveraged for resources that were not created as code.

We strongly recommend upgrading manually created resources to infrastructure as code to gain all of the benefits of codified resources: security, policy and governance, automation, and extensibility. You can also use some great tools to make these transitions at scale and not leave any resources unmanaged in your clouds.